How do you calculate the MSE in a linear regression model?

Mean squared error (MSE) measures the amount of error in statistical models. It is the average of the squared differences between the observed and predicted values. When a model has no error, the MSE equals zero, and the MSE grows as the model's errors grow. The mean squared error is also known as the mean squared deviation (MSD).
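The definition above can be sketched in plain Python. This is a minimal illustration, not a library API: the function name `mean_squared_error` and the sample values are hypothetical. In practice, scikit-learn provides an equivalent `sklearn.metrics.mean_squared_error(y_true, y_pred)`.

```python
def mean_squared_error(observed, predicted):
    """Average of the squared differences between observed and predicted values.

    Hypothetical helper for illustration; not tied to any library.
    """
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")
    if len(observed) == 0:
        raise ValueError("need at least one value")
    # Square each difference so positive and negative errors both add to the total
    squared_diffs = [(y - y_hat) ** 2 for y, y_hat in zip(observed, predicted)]
    return sum(squared_diffs) / len(squared_diffs)


observed = [3.0, 5.0, 7.0]
print(mean_squared_error(observed, [3.0, 5.0, 7.0]))  # perfect predictions: 0.0
print(mean_squared_error(observed, [2.0, 5.0, 9.0]))  # errors 1, 0, -2: (1 + 0 + 4) / 3 ≈ 1.667
```

Squaring means a single error of 2 contributes four times as much as an error of 1, so MSE penalizes large misses more heavily than small ones.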

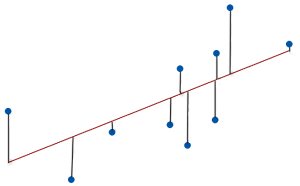

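For example, in regression, the mean squared error is the average squared residual, where a residual is the difference between an observed value and the value predicted by the regression line.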

As the data points fall closer to the regression line, the model has less error, which decreases the MSE. A model with less error produces more precise predictions.
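To make the link between residuals and MSE concrete, here is a small sketch that fits a simple linear regression by ordinary least squares and then averages the squared residuals. The helper names `fit_line` and `regression_mse` and both datasets are hypothetical, chosen only to show that points lying closer to the fitted line give a smaller MSE.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x (hypothetical helper)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope


def regression_mse(xs, ys):
    """Fit a line, then return the mean of the squared residuals."""
    intercept, slope = fit_line(xs, ys)
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    return sum(r ** 2 for r in residuals) / len(residuals)


xs = [1, 2, 3, 4, 5]
tight = [2.1, 3.9, 6.1, 7.9, 10.1]  # points very close to a straight line
loose = [3.0, 3.0, 7.0, 7.0, 10.0]  # similar upward trend, more scatter
print(regression_mse(xs, tight))  # ≈ 0.0096
print(regression_mse(xs, loose))  # ≈ 0.72
```

Both datasets rise at a similar rate, but the scattered one leaves larger residuals around its best-fit line, so its MSE is roughly 75 times higher. The least-squares line is, by construction, the straight line that minimizes the MSE on the data it was fitted to.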

MSE Formula

The formula for MSE is the following.