What is backpropagation?

Backpropagation is at the core of neural network training. It is the method of adjusting the weights of a neural network based on the error measured in the previous training pass (an epoch or iteration). Tuning the weights properly reduces the error and makes the model more reliable by improving its ability to generalize.
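As a rough illustration of what adjusting a weight based on the error can look like, the most common choice is a gradient descent step. The symbols here are assumptions for the sake of the example and do not come from the article: $w$ is a single weight, $E$ is the error (loss), and $\eta$ is a small positive learning rate.

$$
w \leftarrow w - \eta \, \frac{\partial E}{\partial w}
$$

Backpropagation supplies the derivative $\partial E / \partial w$; the update rule itself belongs to the optimizer, and other rules can be used with the same gradient.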

Backpropagation is short for “backward propagation of errors.” It is the standard method for training artificial neural networks: it calculates the gradient of a loss function with respect to all the weights in the network.

The backpropagation algorithm computes the gradient of the loss function with respect to each individual weight using the chain rule. It works efficiently by computing the gradient one layer at a time, moving backward from the output layer, unlike a naive direct computation carried out separately for every weight. Backpropagation only computes the gradient; it does not define how the gradient is used. It generalizes the computation in the delta rule.
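To make the chain rule concrete, here is a minimal sketch for a single weight. The setup is an assumption chosen for illustration, not something the article specifies: one sigmoid neuron, a squared-error loss, and hypothetical values for `w`, `x`, `b`, and `target`. The analytic gradient is compared against a finite-difference estimate as a sanity check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, x, b, target):
    """Squared-error loss of a single sigmoid neuron (illustrative choice)."""
    y = sigmoid(w * x + b)
    return 0.5 * (y - target) ** 2


def grad_w_chain_rule(w, x, b, target):
    """dL/dw = dL/dy * dy/dz * dz/dw, evaluated factor by factor."""
    z = w * x + b
    y = sigmoid(z)
    dL_dy = y - target      # derivative of 0.5 * (y - target)^2 w.r.t. y
    dy_dz = y * (1.0 - y)   # derivative of the sigmoid w.r.t. z
    dz_dw = x               # derivative of w * x + b w.r.t. w
    return dL_dy * dy_dz * dz_dw


def grad_w_numeric(w, x, b, target, eps=1e-6):
    """Central finite difference, used only to check the analytic result."""
    return (loss(w + eps, x, b, target) - loss(w - eps, x, b, target)) / (2 * eps)


# Hypothetical values, picked only for the demonstration
w, x, b, target = 0.5, 1.5, -0.2, 1.0
analytic = grad_w_chain_rule(w, x, b, target)
numeric = grad_w_numeric(w, x, b, target)
print(analytic, numeric)
```

The two printed numbers should agree to several decimal places. In a multi-layer network the same product of local derivatives is extended backward layer by layer, reusing the factors already computed for later layers.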

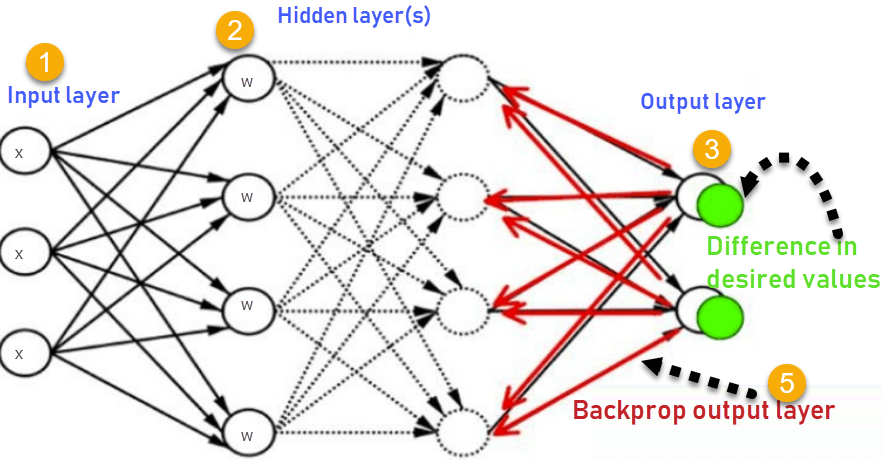

Consider the example backpropagation network in the diagram. Training works as follows:

- Inputs X arrive through the preconnected path.

- The input is modeled using real-valued weights W. The weights are usually initialized randomly.

- Calculate the output of every neuron, from the input layer through the hidden layers to the output layer.

- Calculate the error in the outputs.

- Travel back from the output layer through the hidden layers, adjusting the weights so that the error decreases.

$\text{Error}_B = \text{Actual Output} - \text{Desired Output}$

Keep repeating the process until the desired output is achieved.
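The steps above can be sketched in plain Python as follows. Everything specific here is an assumption made for the example rather than part of the article: a network with one hidden layer of three sigmoid neurons, a squared-error loss, plain gradient descent with learning rate `lr`, the logical OR function as training data, and a fixed number of epochs standing in for "until the desired output is achieved".

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward pass: input layer -> hidden layer -> single output neuron."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output


def total_error(data, W1, b1, W2, b2):
    """Sum of squared errors over the whole data set."""
    return sum(0.5 * (forward(x, W1, b1, W2, b2)[1] - t) ** 2 for x, t in data)


def train(data, n_hidden=3, lr=0.5, epochs=2000, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Weights are initialized randomly; biases start at zero
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    history = [total_error(data, W1, b1, W2, b2)]

    for _ in range(epochs):
        for x, target in data:
            # Compute every neuron's output
            hidden, output = forward(x, W1, b1, W2, b2)
            # Error = actual output - desired output
            error = output - target
            # Backward pass: output layer first, then the hidden layer
            delta_out = error * output * (1.0 - output)
            delta_hidden = [delta_out * W2[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            # Gradient descent step on every weight and bias
            for j in range(n_hidden):
                W2[j] -= lr * delta_out * hidden[j]
                for i in range(n_in):
                    W1[j][i] -= lr * delta_hidden[j] * x[i]
                b1[j] -= lr * delta_hidden[j]
            b2 -= lr * delta_out
        history.append(total_error(data, W1, b1, W2, b2))

    return (W1, b1, W2, b2), history


# Logical OR as a tiny, hypothetical training set: (inputs, desired output)
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
params, history = train(data)
print(f"error before training: {history[0]:.4f}")
print(f"error after training:  {history[-1]:.4f}")
```

The hidden-layer deltas are computed before any weight is changed, so the backward pass uses the same weights that produced the forward pass. A real training loop would usually stop on a tolerance or a validation metric rather than a fixed epoch count.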